A Robust Interactive Facial Animation Editing System

Motion, Interaction and Games, 2019.

Authors

Eloise Berson (CentraleSupélec, Dynamixyz)

Catherine Soladié (CentraleSupélec, Dynamixyz)

Vincent Barrielle (Dynamixyz)

Nicolas Stoiber (Dynamixyz)

Abstract

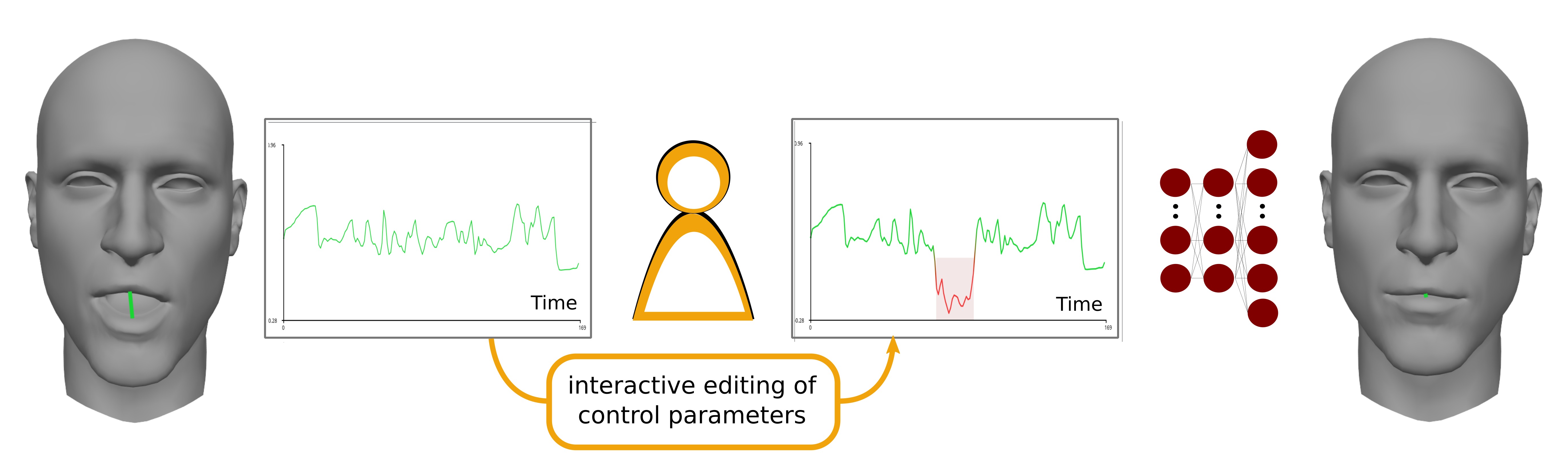

Over the past few years, the automatic generation of facial animation for virtual characters has garnered interest among the animation research and industry communities. Recent research contributions leverage machine-learning approaches to generate plausible facial animation from audio and/or video signals. However, these approaches do not address the problem of animation editing, that is, correcting an unsatisfactory baseline animation or modifying the animation content itself. In facial animation pipelines, editing an existing animation is just as important and time-consuming as producing a baseline. In this work, we propose a new learning-based approach to easily edit a facial animation from a set of intuitive control parameters. To cope with high-frequency components in facial movements and preserve temporal coherency in the animation, we use a resolution-preserving fully convolutional neural network that maps control parameters to sequences of blendshape coefficients. We stack an additional resolution-preserving animation autoencoder after the regressor to ensure that the system outputs natural-looking animation. The proposed system is robust and can handle coarse, exaggerated edits from non-specialist users. It also retains the high-frequency motion of the facial animation. Training and testing are performed on an extension of the B3D(AC)^2 database [10], which we make available with this paper at http://www.rennes.centralesupelec.fr/biwi3D.
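
As a rough illustration of the pipeline described in the abstract, the sketch below chains two stacks of temporal convolutions: a regressor from control parameters to blendshape coefficients, followed by an autoencoder-like stage. It assumes that "resolution-preserving" means stride-1 convolutions with zero padding, so that the output has exactly as many frames as the input. The layer sizes, the constant weights, and all function names (`conv1d_same`, `run_stack`, `constant_layer`, `edit_animation`) are hypothetical and are not taken from the paper.

```python
from typing import List, Tuple

Frames = List[List[float]]                            # T frames x C channels
Layer = Tuple[List[List[List[float]]], List[float]]   # (K x C_in x C_out weights, C_out bias)


def conv1d_same(x: Frames, layer: Layer) -> Frames:
    """Stride-1 temporal convolution with zero padding: one output frame per input frame."""
    weights, bias = layer
    k = len(weights)
    assert k % 2 == 1, "an odd kernel size keeps each output centred on its input frame"
    half = k // 2
    out: Frames = []
    for t in range(len(x)):
        frame = list(bias)
        for j, tap in enumerate(weights):
            src = t + j - half
            if 0 <= src < len(x):  # frames outside the sequence count as zeros
                for ci, row in enumerate(tap):
                    for co, w in enumerate(row):
                        frame[co] += x[src][ci] * w
        out.append(frame)
    return out


def run_stack(x: Frames, layers: List[Layer]) -> Frames:
    """Apply the layers in order, with a ReLU between layers (not after the last one)."""
    for i, layer in enumerate(layers):
        x = conv1d_same(x, layer)
        if i < len(layers) - 1:
            x = [[max(0.0, v) for v in frame] for frame in x]
    return x


def constant_layer(k: int, c_in: int, c_out: int, value: float) -> Layer:
    """Placeholder weights; a trained network would learn these values."""
    weights = [[[value] * c_out for _ in range(c_in)] for _ in range(k)]
    return weights, [0.0] * c_out


def edit_animation(controls: Frames, regressor: List[Layer], autoencoder: List[Layer]) -> Frames:
    """Regress blendshape coefficients from controls, then pass them through the second stage."""
    coarse = run_stack(controls, regressor)
    return run_stack(coarse, autoencoder)


# 8 frames, 2 hypothetical control parameters per frame
controls = [[0.1 * t, 1.0] for t in range(8)]
regressor = [constant_layer(3, 2, 4, 0.1), constant_layer(3, 4, 3, 0.1)]
autoencoder = [constant_layer(3, 3, 2, 0.1), constant_layer(3, 2, 3, 0.1)]
blendshapes = edit_animation(controls, regressor, autoencoder)
print(len(blendshapes), len(blendshapes[0]))
```

Because no layer downsamples along the time axis, an 8-frame control sequence yields an 8-frame blendshape sequence. The second stage here narrows only the channel dimension, which is one possible reading of an autoencoder that preserves temporal resolution.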

Bibtex

@inproceedings{berson_robust_2019,
  author = {Berson, Eloise and Soladié, Catherine and Barrielle, Vincent and Stoiber, Nicolas},
  title = {A Robust Interactive Facial Animation Editing System},
  booktitle = {Proceedings of the 12th Annual International Conference on Motion, Interaction, and Games},
  series = {MIG '19},
  year = {2019},
  location = {Newcastle-upon-Tyne, United Kingdom},
  pages = {26:1--26:10},
  articleno = {26},
  numpages = {10},
  url = {http://doi.acm.org/10.1145/3359566.3360076},
  doi = {10.1145/3359566.3360076},
  publisher = {ACM},
  address = {New York, NY, USA},
  keywords = {facial animation, animation editing, neural networks},
}

Dataset

To get the dataset, please send an email to eloise<dot>berson<at>dynamixyz<dot>com or go to http://www.rennes.centralesupelec.fr/biwi3D.